In which we get our old test suites to run in this new world of containers.

A Barrel Full of Ruby Cucumbers #

Remember all those Cucumber tests we wrote so very long ago?

124 scenarios (124 passed)

1241 steps (1241 passed)

50m36.933s

Now that we have the database and game server running in Docker containers, might as well get the tests running in that environment as well.

Previously, the tests interacted with the game server in a few ways:

- Directly launching/killing the `DragSpinExp` executable.

- Writing to the `.config` file.

- Reading/writing to the game database.

- Telnetting into the game and interacting as a user would.

So let’s see how we have to address these in the test code.

Game Server Goes Up, Game Server Goes Down #

The Before Times: Co-located on a Windows server with the game server executable, using `taskkill` and `Process.spawn()` to bring it up and down:

Let’s replace those with the appropriate Docker commands, calling them using `Open3.capture3()` so we can easily get `stdout` and `stderr` to use in debugging:
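As a rough sketch of the pattern (not the original listing), the helpers might look something like this. The `run_logged` wrapper and the body of `stop_server` are assumptions made for illustration; the container name and the `docker exec --detach` call are the ones used in this post.

```ruby
require 'open3'

GAME_CONTAINER = 'seitan-spin-gameserver-1'

# Run a command, capturing stdout, stderr, and the exit status so that
# a failure can be reported with some context.
def run_logged(*cmd)
  stdout, stderr, status = Open3.capture3(*cmd)
  unless status.success?
    warn "Command failed (#{status.exitstatus}): #{cmd.join(' ')}"
    warn "stdout: #{stdout}" unless stdout.empty?
    warn "stderr: #{stderr}" unless stderr.empty?
  end
  { stdout: stdout, stderr: stderr, success: status.success? }
end

# Launch the game server executable inside the already-running container.
def start_server
  run_logged('docker', 'exec', '--detach', GAME_CONTAINER, './entrypoint.sh')
end

# Assumption: restarting the container is enough to stop the executable,
# because the container itself does not launch it on startup.
def stop_server
  run_logged('docker', 'restart', GAME_CONTAINER)
end
```

Passing the command as separate arguments avoids a shell, so there is no quoting to get wrong, and the returned hash keeps both output streams around for whatever the step definitions want to log.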

Hmm, could use some refactoring. But wait a minute, why `docker exec --detach seitan-spin-gameserver-1 "./entrypoint.sh"`? In order to bounce the game server executable, while retaining access to the `.config` file, we need to split that container’s “running” status into “container running” and “game server executable running”. So we override the entrypoint in `docker-compose.yml`:

And now starting the container doesn’t start the executable, and we can call `./entrypoint.sh` when needed (once we’ve set the `.config` file and database up correctly for the test).

Reconfigure as Needed #

There are a number of configuration options for the game server that live in the .config file:


Since it’s only read by the executable at startup, any changes need to be made in this file before it’s executed. To that end, we’ll need to map that folder outside of the container.


I did find the process of doing this in Docker Compose a bit confusing, to be honest. It’s possible (probable) that this isn’t actually the best way to do it.

With that volume set up, and making sure the container is running, we know where to find the file and how to adjust it:

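One way to make that adjustment from the test code is sketched below. It assumes the `.config` file is a standard .NET XML file with an `<appSettings>` section and that the mapped folder is readable and writable from the test process. The helper name, the path, and the key in the usage note are hypothetical.

```ruby
require 'rexml/document'

# Set one <appSettings> entry in a .NET-style XML config file.
# Returns true if the key was found and updated, false otherwise.
def set_config_value(path, key, value)
  doc = REXML::Document.new(File.read(path))
  node = REXML::XPath.first(doc, "//appSettings/add[@key='#{key}']")
  return false if node.nil?

  node.attributes['value'] = value.to_s
  File.write(path, doc.to_s)
  true
end
```

A step definition could then call something like `set_config_value('path/to/mapped/app.config', 'SomeSetting', 'false')` before the server is started. Returning `false` for an unknown key makes a typo in a feature file show up immediately rather than as a confusing test failure later.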

Once the config file is set up however we need it, we can then call `start_server()` to actually launch the game server executable.

A Base for Data #

We were already operating at arm’s length from the database, using TinyTds for the connection and sending raw SQL queries.


We were using calls to `sqlcmd` for the heavy lifting like loading the entire database; but since we have the databases baked into the container image, we don’t need to do that anymore.

The new issue with talking to the database is that the game server and the tests will do so in different ways. On the container network, the game server will make calls to `sql` on port 1433. From the outside, the tests will call to port 27999.

Wait a minute, why not just map the same port, so it’s 1433 regardless? It’s a little safety tip that I’ve picked up: make sure that the code you write is executing where you think it is. If I write something I thought would run in the test context, and it runs inside one of the containers instead, the wrong port number will cause it to fail, and I’ll know my mistake. This rule of thumb has saved me hours of debugging on many occasions.

Log In and Play #

Similarly, we map the game server’s telnet port 3000 to 27998 outside the containers:


Simple enough to just introduce a DS_PORT environment variable for it:

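A minimal sketch of how the test side might collect both mapped ports in one place. `DS_PORT` is the variable mentioned above; `DS_HOST` and `DS_DB_PORT` are hypothetical names used only for this example, and the defaults are the mapped ports from this post.

```ruby
# Where the test process finds each service from outside the containers.
# Assumption: DS_HOST and DS_DB_PORT are made-up names for this sketch.
def test_endpoints(env = ENV)
  host = env.fetch('DS_HOST', 'localhost')
  {
    # String keys, in the style Net::Telnet.new expects.
    telnet: { 'Host' => host, 'Port' => Integer(env.fetch('DS_PORT', '27998')) },
    # Symbol keys, in the style TinyTds::Client.new expects.
    database: { host: host, port: Integer(env.fetch('DS_DB_PORT', '27999')) }
  }
end
```

The telnet hash can be passed to `Net::Telnet.new` and the database hash merged with credentials for `TinyTds::Client.new`. Using `Integer()` rather than `to_i` means a mistyped port fails loudly instead of silently becoming 0.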

Make It Work #

Now we put together the bash version of the run_tests.cmd script.


We’ll come back to that domain logic in a bit. But does it work when I run it locally?

$ docker images -a

REPOSITORY TAG IMAGE ID CREATED SIZE

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

$ ./run_tests.sh features/temp.feature

localdomain

[+] Running 2/2

⠿ gameserver Pulled 17.3s

⠿ sql Pulled 21.5s

[+] Running 3/3

⠿ Network seitan-spin_default Created 0.1s

⠿ Container seitan-spin-sql-1 Healthy 5.9s

⠿ Container seitan-spin-gameserver-1 Started 6.1s

Using rake 13.0.6

Using ansi-sys-revived 0.8.4

...

Using webrick 1.8.1

Using win32-process 0.10.0

Bundle complete! 10 Gemfile dependencies, 41 gems now installed.

Use `bundle info [gemname]` to see where a bundled gem is installed.

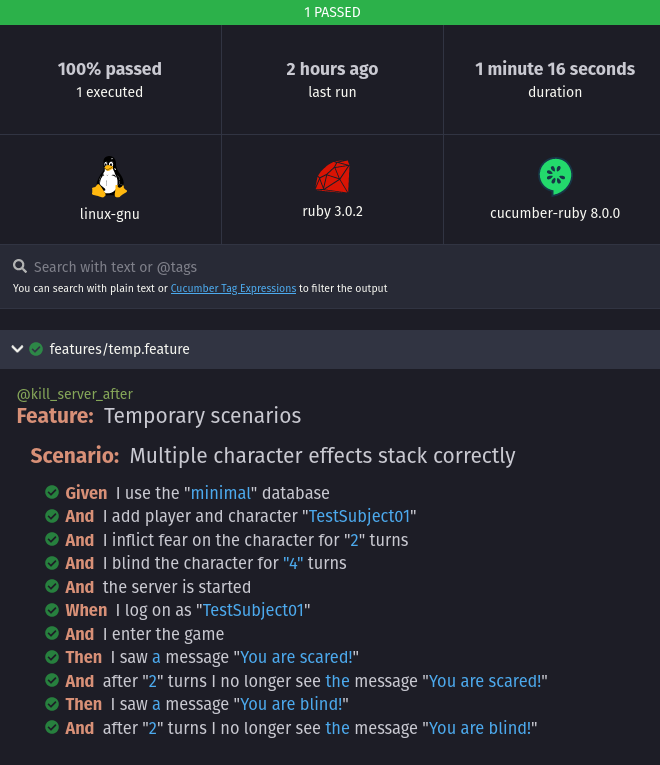

@kill_server_after

Feature: Temporary scenarios

Scenario: Multiple character effects stack correctly # features/temp.feature:4

Given I use the "minimal" database # features/steps/server_steps.rb:21

Getting ID from player TestSubject01_name

Player ID for TestSubject01_name is 2

And I add player and character "TestSubject01" # features/steps/account_steps.rb:12

Getting ID from player TestSubject01_name

Player ID for TestSubject01_name is 2

And I inflict fear on the character for "2" turns # features/steps/character_steps.rb:217

Getting ID from player TestSubject01_name

Player ID for TestSubject01_name is 2

And I blind the character for "4" turns # features/steps/character_steps.rb:222

And the server is started # features/steps/server_steps.rb:39

When I log on as "TestSubject01" # features/steps/login_steps.rb:190

And I enter the game # features/steps/login_steps.rb:266

Then I saw a message "You are scared!" # features/steps/character_steps.rb:145

And after "2" turns I no longer see the message "You are scared!" # features/steps/character_steps.rb:339

Then I saw a message "You are blind!" # features/steps/character_steps.rb:145

And after "2" turns I no longer see the message "You are blind!" # features/steps/character_steps.rb:339

1 scenario (1 passed)

11 steps (11 passed)

1m16.731s

And we even get nice-looking HTML output:

Testing in the CI Environment #

In order to have this run in one of GitLab’s handy CI containers, we have to revisit our good friend, the Docker-in-Docker container (GitLab’s docs).

While the tests run in one Docker container, they will bring up the server containers in another. The hostname for it will be `docker`, so we use that instead of `localhost` when interacting with the mapped ports (27998 and 27999).

So that explains this part of the run_tests.sh script:


Your mileage may vary, especially if your home servers have a DNS domain other than `local` or `localdomain`.

The `.gitlab-ci.yml` file got kind of interesting:

That’s not even the whole thing, but we can stop there for now.

In the `.common_test` definition that’s common to all of the test jobs: set the environment variables to make the whole Docker-in-Docker thing work (with lots of explanatory comments, copied from GitLab’s docs). Note the service definition that actually brings up the separate “docker” container. In the `before_script`, add all of the pieces we’ll need to install and run the set of Ruby gems we rely upon (some of which need to build native extensions). Lastly, make sure we always keep the Cucumber output files as artifacts.

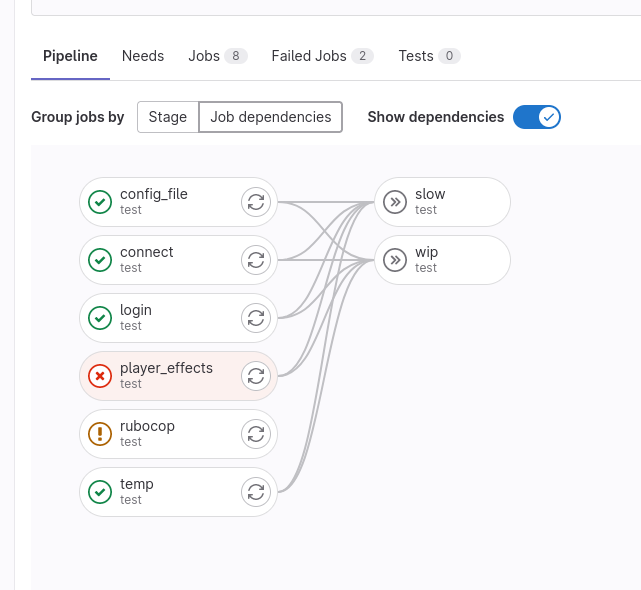

Then we define some separate test jobs that extend `.common_test`, with the only real difference being the feature file that they take their scenarios from. These can all run in parallel, in theory. So far so good.

The `slow` and `wip` jobs are a little different. They have their own bash scripts, and we use the `needs` clause to run them only if all of the standard tests pass. In theory (again), `slow` contains the long-runtime tests; no need to waste the time if other stuff is broken. And `wip` should contain tests that aren’t quite reliable yet; not helpful to run on top of a shaky foundation.

In the `run_wip.sh` script we also have all of the debug noise turned up to 11:

Not Quite Perfect Yet #

The player_effects test job is failing, for a kind of surprising reason:

| Protection_from_Blind_and_Fear | 7 | Blind | 1 | | |

Getting ID from player TestRP01_name

Player ID for TestRP01_name is 2

Getting ID from player TestRP01_name

Player ID for TestRP01_name is 2

| Protection_from_Blind_and_Fear | 8 | Fear | 1 | | |

ERROR: Job failed: execution took longer than 1h0m0s seconds

I guess my assessment of which tests are “slow” might be outdated. Or I just need to bundle them differently. Tomorrow.

One More Thing #


Why is this `@slow`? Can’t computers roll dice really quickly? And at this point we’re not in the game, so we’re not waiting for the round timer. Even though we’re rolling many times to try to (somewhat) verify the constraints, it shouldn’t be taking that long.

Normally we need to be careful with the telnet connection, because we can easily get false positives as far as when the server is done sending text. We wait for a prompt, but what if that prompt also appears somewhere in the output? But in the case of rolling stats, we know we’re done when we see the `Roll again (y,n):` come across. So we specifically set the `Waittime` to zero:

Just to be sure, let’s time that telnet_command() call, see if it’s taking longer than we’d expect:

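A small timing wrapper along these lines would do the job. This is a sketch, and the `timed` helper name is made up for the example. It uses the monotonic clock so that system clock adjustments cannot skew the measurement.

```ruby
# Run the block, report how long it took, and return the block's result.
def timed(label)
  started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  result = yield
  elapsed = Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
  puts "#{label}: #{elapsed}"
  result
end
```

Wrapping the existing call, as in `timed('Time waited for telnet response') { connection.cmd(command) }`, leaves the return value untouched, so the surrounding step code does not need to change.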

$ grep "Time waited for telnet" cucumber_wip.out

Time waited for telnet response: 1.15628657 # features/lib/misc_helper.rb:114:in `telnet_command'

Time waited for telnet response: 1.10263682 # features/lib/misc_helper.rb:114:in `telnet_command'

Time waited for telnet response: 1.040668261 # features/lib/misc_helper.rb:114:in `telnet_command'

Time waited for telnet response: 1.10236742 # features/lib/misc_helper.rb:114:in `telnet_command'

Time waited for telnet response: 1.100364496 # features/lib/misc_helper.rb:114:in `telnet_command'

Time waited for telnet response: 1.102931419 # features/lib/misc_helper.rb:114:in `telnet_command'

Time waited for telnet response: 1.122063689 # features/lib/misc_helper.rb:114:in `telnet_command'

Time waited for telnet response: 1.104850838 # features/lib/misc_helper.rb:114:in `telnet_command'

Time waited for telnet response: 1.103263606 # features/lib/misc_helper.rb:114:in `telnet_command'

Time waited for telnet response: 1.107096273 # features/lib/misc_helper.rb:114:in `telnet_command'

Time waited for telnet response: 1.10310023 # features/lib/misc_helper.rb:114:in `telnet_command'

Time waited for telnet response: 1.104339874 # features/lib/misc_helper.rb:114:in `telnet_command'

...

Never less than a second.

It certainly doesn’t look like our override of `Waittime` is helping much. Just to be sure, let’s check out the source of the `cmd()` method in the `net/telnet` library.

Oh, `Waittime` is not actually one of the options that it pays attention to in the parameter hash. So my one-second default is in effect even when I try to override it with a zero. I can, however, pass it directly to `waitfor()`:

Now I can write a `telnet_command_fast()` that bypasses `cmd()` and goes straight to `waitfor()` with a zero wait time:
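In outline, such a helper might look like the sketch below. The parameter list is an assumption for illustration. The `connection` argument only needs to respond to `puts` and `waitfor` the way a `Net::Telnet` instance does.

```ruby
# Send a command, then return as soon as the expected text has arrived.
# Unlike cmd(), waitfor() honors a per-call "Waittime" option.
def telnet_command_fast(connection, command, expected)
  connection.puts(command)
  connection.waitfor('String' => expected, 'Waittime' => 0)
end
```

This is only safe where the expected text cannot show up earlier in the output, as with `telnet_command_fast(connection, 'y', 'Roll again (y,n):')` while rolling stats. Elsewhere the slower, more cautious path is still the better choice.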

...

Time waited for telnet response: 0.102208506 # features/lib/misc_helper.rb:129:in `telnet_command_fast'

Time waited for telnet response: 0.103160697 # features/lib/misc_helper.rb:129:in `telnet_command_fast'

Time waited for telnet response: 0.098152807 # features/lib/misc_helper.rb:129:in `telnet_command_fast'

Time waited for telnet response: 0.138335645 # features/lib/misc_helper.rb:129:in `telnet_command_fast'

Time waited for telnet response: 0.061805784 # features/lib/misc_helper.rb:129:in `telnet_command_fast'

Time waited for telnet response: 0.103061699 # features/lib/misc_helper.rb:129:in `telnet_command_fast'

Time waited for telnet response: 0.140917246 # features/lib/misc_helper.rb:129:in `telnet_command_fast'

Time waited for telnet response: 0.05900543 # features/lib/misc_helper.rb:129:in `telnet_command_fast'

Time waited for telnet response: 0.098719397 # features/lib/misc_helper.rb:129:in `telnet_command_fast'

Time waited for telnet response: 0.14280107 # features/lib/misc_helper.rb:129:in `telnet_command_fast'

...

Much better.